Someone said the dreaded architecture word and you’re Not-An-Architect?

Scared when someone challenges your understanding of computational complexity when all you’re trying to do is put a widget on a webpage?

Never fear – it’s probably not nearly as sophisticated or as complex as you might think.

Architecture has a reputation for being unapproachable, gate-kept, and “hard computer science”. In reality, most of the software architecture you run into, for average-to-web-scale web apps, is astonishingly similar.

We’re going to cover the basics, some jargon, and some architectural patterns you’ll probably see everywhere in this brief architectural primer for not-architects and web programmers.

What even is a webserver?

Ok so let’s start with the basics. The “web”, or the “world wide web” – to use its hilariously antiquated full honorific – is just a load of computers connected to the internet. The web is a series of conventions that describe how “resources” (read: web pages) can be retrieved from these connected computers.

Long story short, “web servers” implement the “HTTP protocol” – a series of commands you can send to remote computers – that lets you say “hey, computer, send me that document”. If this sounds familiar, it’s because that’s how your web browsers work.

When you type www.my-awesome-website.com into your browser, the code running on your computer crafts an “HTTP request” and sends it to the web server associated with the URL (read: the website address) you typed into the address bar.

So, the web server is the program running on the remote computer, connected to the internet, that’s listening for requests and returning data when it receives them. The fact this works at all is a small miracle and is built on top of DNS (the thing that turns my-awesome-website.com into an IP address), and a lot of networking, routing and switching. You probably don’t need to know too much about any of that in real terms unless you’re going deep.
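To make “a program that listens for requests and returns data” concrete, here’s a minimal sketch using the web server that ships in Python’s standard library. The handler name, the response text and the `fetch_once` helper are made up for illustration, and it only listens on your own machine.

```python
import http.client
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer


class HelloHandler(BaseHTTPRequestHandler):
    """Answers every GET request with a tiny HTML document."""

    def do_GET(self):
        body = b"<h1>Hello from a web server</h1>"
        self.send_response(200)
        self.send_header("Content-Type", "text/html; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the example quiet


def fetch_once():
    """Start the server on a free local port, request one page, shut down."""
    server = HTTPServer(("127.0.0.1", 0), HelloHandler)  # port 0 = any free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        conn = http.client.HTTPConnection("127.0.0.1", server.server_port, timeout=5)
        conn.request("GET", "/")
        response = conn.getresponse()
        result = (response.status, response.read().decode("utf-8"))
        conn.close()
        return result
    finally:
        server.shutdown()
        server.server_close()
```

Calling `fetch_once()` plays both roles: it starts the server, acts as the browser for a single request, and hands back the status code and the HTML.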

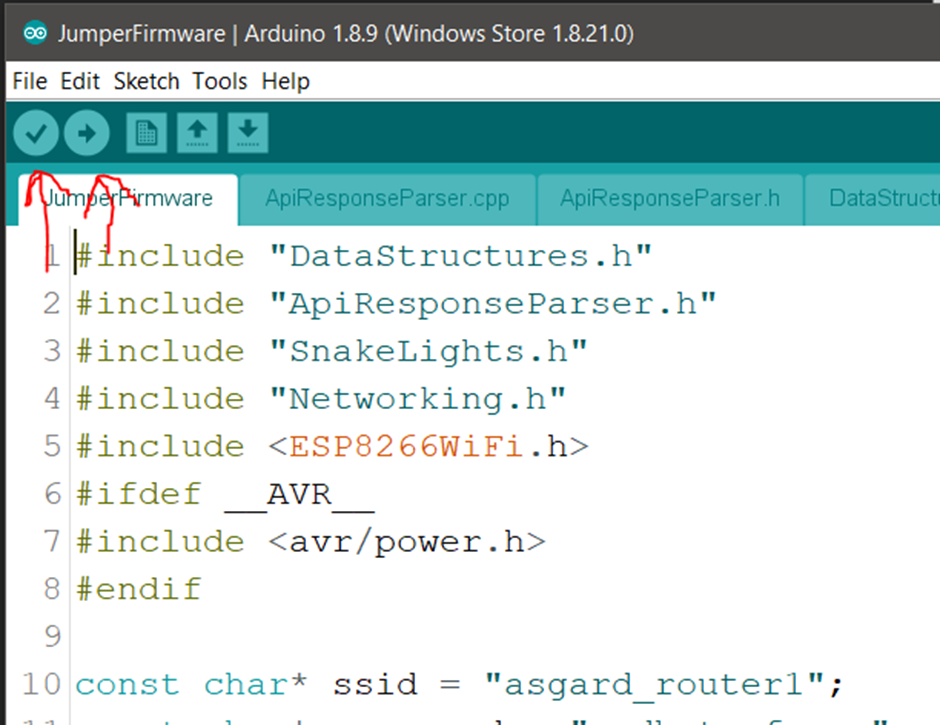

There are a tonne of general purpose web servers out there – but realistically, you’ll probably just see a mixture of Apache, NGINX and Microsoft IIS, along with some development stack specific web servers (Node.js serves itself, as can things like ASP.NET Core for C#, and http4k for Kotlin).

How does HTTP work? And is that architecture?

If you’ve done any web programming at all, you’ll likely be at least a little familiar with HTTP.

It stands for “Hypertext Transfer Protocol”, and it’s what your browser talks when it talks to web servers. Let’s look at a simple raw HTTP “request message”:

GET http://www.davidwhitney.co.uk/ HTTP/1.1

Host: www.davidwhitney.co.uk

Connection: keep-alive

User-Agent: Mozilla/5.0 (Windows NT 10.0; Win64; x64…

Accept: text/html,application/xhtml+xml,application/xml;q=0.9

Accept-Encoding: gzip, deflate

Accept-Language: en-GB,en-US;q=0.9,en;q=0.8

The basics of HTTP are easy to grasp – there’s a mandatory “request line” – that’s the first bit with a verb (one of GET, POST, PUT and HEAD most frequently), the URL (the web address) and the protocol version (HTTP/1.1).

There’s then a bunch of optional request header fields – that’s all the other stuff – think of this as extra information you’re handing to the webserver about you. After your headers, there’s a blank line, and an optional body.
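If you want to see how little there is to it, here’s a rough sketch of pulling a raw request apart into those pieces. The `parse_request` function is a hypothetical helper, not part of any library, and it assumes a well-formed message with `\r\n` line endings.

```python
def parse_request(raw):
    """Split a raw HTTP/1.1 request into request line parts, headers and body."""
    head, _, body = raw.partition("\r\n\r\n")  # a blank line ends the headers
    lines = head.split("\r\n")
    method, target, version = lines[0].split(" ", 2)  # the request line
    headers = {}
    for line in lines[1:]:
        name, _, value = line.partition(":")
        headers[name.strip().lower()] = value.strip()  # header names ignore case
    return method, target, version, headers, body


raw_request = (
    "GET / HTTP/1.1\r\n"
    "Host: www.davidwhitney.co.uk\r\n"
    "Accept-Language: en-GB,en-US;q=0.9,en;q=0.8\r\n"
    "\r\n"
)
method, target, version, headers, body = parse_request(raw_request)
```

Real servers handle plenty of edge cases this ignores, but the shape is the same: one request line, some headers, a blank line, and maybe a body.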

That’s HTTP/1.1. We’re done here. The server will respond in similar form:

HTTP/1.1 200 OK

Cache-Control: public,max-age=1

Content-Type: text/html; charset=utf-8

Vary: Accept-Encoding

Server: Kestrel

X-Powered-By: ASP.NET

Date: Wed, 11 Dec 2019 21:52:23 GMT

Content-Length: 8479

<!DOCTYPE html>

<html lang="en">...

The first line is the status line, which carries a status code, and it’s followed by headers and a response body. That’s it. The web server, based on the content of a request, can send you anything it likes, and the software that’s making the request must be able to interpret the response. There’s a lot of nuance in asking for the right thing, and responding appropriately, but the basics are the same.

The web is an implementation of the architectural style REST – which stands for “Representational State Transfer”. You’ll hear people talk about REST a lot – it was originally defined by Roy Fielding in his PhD dissertation, but more importantly was a description of the way HTTP/1.0 worked at the time, and was documented at the same time Fielding was working on HTTP/1.1.

So the web is RESTful by default. REST describes the way HTTP works.

The short version? Uniquely addressable URIs (web addresses) that return a representation of some state held on a machine somewhere (the web pages, documents, images, et al).

Depending on what the client asks for, the representation of that state could vary.
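Here’s a small sketch of that idea: one piece of state, two representations, chosen by the `Accept` header the client sends. The article data and the `represent` function are invented for the example, and it deliberately ignores the `q=` weightings a real server would honour.

```python
import json

# One piece of state held on the server (hypothetical example data).
ARTICLE = {"title": "Architecture for everyone", "author": "A. Writer"}


def represent(resource, accept):
    """Return (content_type, body) for the first media type we can produce."""
    for item in accept.split(","):
        media_type = item.split(";")[0].strip()  # drop parameters such as q=0.9
        if media_type == "application/json":
            return "application/json", json.dumps(resource)
        if media_type in ("text/html", "*/*"):
            break
    # Fall back to HTML, which is what a browser would ask for anyway.
    html = "<h1>{title}</h1><p>by {author}</p>".format(**resource)
    return "text/html", html
```

Same URI, same state, different representation depending on who’s asking.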

So that’s HTTP, and REST, and an architectural style all in one.

What does the architecture of a web application look like?

You can write good software following plenty of different architectural patterns, but most people stick to a handful of common patterns.

“The MVC App”

MVC – model view controller – is a simple design pattern that decouples the processing logic of an application and the presentation of it. MVC was really catapulted into the spotlight by the success of Ruby on Rails (though the pattern was a couple of decades older) and when most people say “MVC” they’re really describing “Rails-style” MVC apps where your code is organised into a few different directories:

/controllers

/models

/views

Rails popularised the use of “convention over configuration” to wire all this stuff together, along with the idea of “routing” and sensible defaults. This was cloned by ASP.NET MVC almost wholesale, and pretty much every other MVC framework since.

As a broad generalisation, by default, if you have a URL that looks something like

http://www.mycoolsite.com/Home/Index

An MVC framework, using its “routes” – the rules that define where things are looked up – would try and find a “HomeController” file or module (depending on your programming language) inside the controllers directory. A function called “Index” would probably exist. That function would return a model – some data – that is rendered by a “view” – an HTML template from the views folder.

All the different frameworks do this slightly differently, but the core idea stays the same – features grouped together by controllers, with functions for returning pages of data and handling input from the web.
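As a toy illustration of that convention-based lookup, here’s a sketch of a router that maps `/Home/Index` to a controller, an action and a view. Every name in it (`HomeController`, `CONTROLLERS`, `VIEWS`, `handle`) is hypothetical; real frameworks discover these from your directories rather than from hand-written dictionaries.

```python
class HomeController:
    """Hypothetical controller; a framework would find it by naming convention."""

    def index(self):
        return {"title": "Welcome", "visitors": 3}  # the model: plain data


# Stand-ins for the /controllers and /views directories.
CONTROLLERS = {"HomeController": HomeController}
VIEWS = {"Home/Index": "<h1>{title}</h1><p>{visitors} visitors today</p>"}


def handle(path):
    """Route /Controller/Action to a controller method, then render its view."""
    parts = [part for part in path.split("/") if part]
    controller_name = parts[0] if len(parts) > 0 else "Home"  # sensible defaults
    action_name = parts[1] if len(parts) > 1 else "Index"
    controller = CONTROLLERS[controller_name + "Controller"]()
    model = getattr(controller, action_name.lower())()
    return VIEWS[controller_name + "/" + action_name].format(**model)
```

`handle("/Home/Index")` and `handle("/")` both end up running `HomeController.index` and rendering the same template, which is the “sensible defaults” part of the convention.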

“The Single Page App with an API”

SPAs are incredibly common, popularised by client-side web frameworks like Angular, React and Vue.js. The only real difference here is we’re taking our MVC app and shifting most of the work it does to the client side.

There are a couple of flavours here – there’s client side MVC, there’s MVVM (model-view-view-model), and there’s functional reactive programming (FRP). The differences might seem quite subtle at first.

Angular is a client side MVC framework – following the “models, views and controllers” pattern – except now it’s running inside the user’s web browser.

React is often described as an implementation of functional reactive programming. It’s a little more flexible, but is more concerned with state change events in data – often using a state store like Redux for its data.

MVVM is equally common in single page apps where there are two-way bindings between something that provides data (the model) and the UI (which the view model serves).

Underneath all these client heavy JavaScript frameworks is generally an API that looks nearly indistinguishable from “the MVC app”, but instead of returning pre-rendered pages, returns the data that the client “binds” its UI to.

“Static Sites Hosted on a CDN or other dumb server”

Perhaps the outlier of the set – there’s been a resurgence of static websites in the 2010s. See, scaling websites for high traffic is hard when you keep running code on your computers.

We spent years building relatively complicated and poorly performing content management systems (like WordPress), that cost a lot of money and hardware to scale.

As a reaction, moving the rendering of content to a “development time” exercise has distinct cost and scalability benefits. If there’s no code running, it can’t crash!

So static site generators became increasingly popular – normally allowing you to use your normal front-end web dev stack, but then generating all the files using a build tool to bundle and distribute to dumb web servers or CDNs. See tools like Gatsby, Hugo, Jekyll and Wyam.

“Something else”

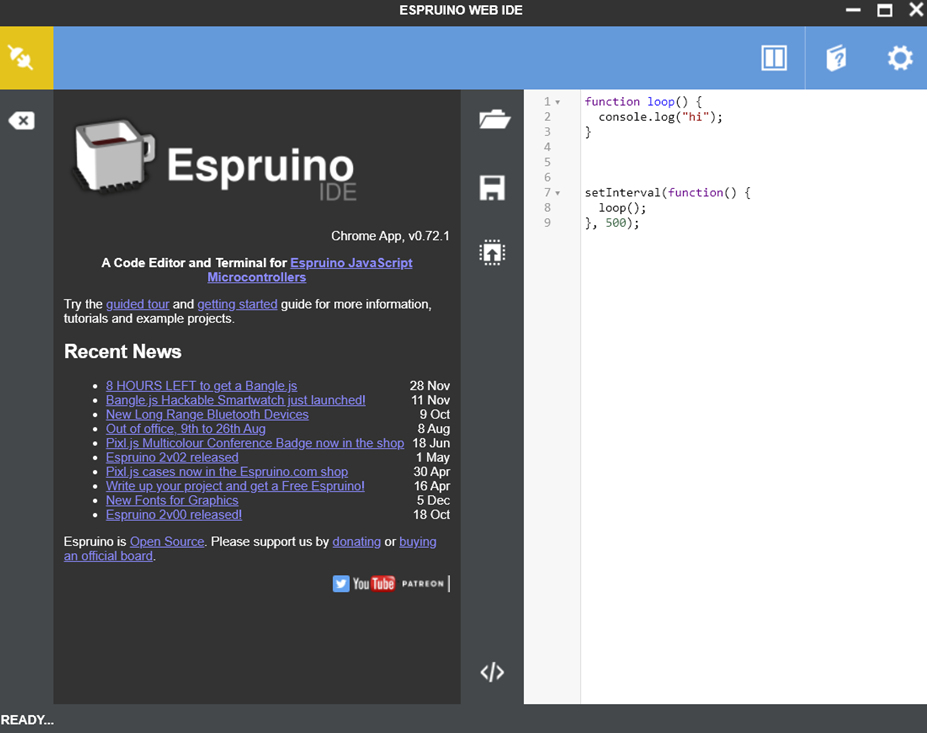

There are other archetypes that web apps follow – there’s a slowly rising trend in transpiled frameworks (Blazor for C# in WebAssembly, and Kotlin’s JavaScript compile targets) – but with the vast popularity of the dominant JavaScript frameworks of the day, they all try to play along nicely.

Why would I choose one over the other?

Tricky question. Honestly for the most part it’s a matter of taste, and they’re all perfectly appropriate ways to build web applications.

Server-rendered MVC apps are good for low-interactivity websites. Even though high fidelity frontend is a growing trend, there’s a huge category of websites that are just that – web sites, not web applications – and the complexity cost of a large toolchain is often not worth the investment.

Anything that requires high fidelity UX, almost by default now, is probably a React, Angular or Vue app. The programming models work well for responsive user experiences, and if you don’t use them, you’ll mostly end up reinventing them yourself.

Static sites? Great for blogs, marketing microsites, content management systems, anything where the actual content is the most valuable interaction. They scale well, basically cannot crash, and are cheap to run.

HTTP APIs, REST, GraphQL, Backend-for-Frontends

You’re absolutely going to end up interacting with APIs, and while there are a lot of terms that get thrown around to make this stuff sound complicated, the core is simple.

Most APIs you use or build will be “REST-ish”.

You’ll be issuing the same kind of “HTTP requests” that your browsers do, mostly returning JSON responses (though sometimes XML). It’s safe to describe most of these APIs as JSON-RPC or XML-RPC.

Back at the turn of the millennium there was a push for standardisation of “SOAP” (Simple Object Access Protocol) APIs, and while that came with a lot of good stuff, people found the XML cumbersome to read and it diminished in popularity.

Ironically, lots of the stuff that was solved in SOAP (consistent message envelope formats, security considerations, schema verification) has subsequently had to be “re-solved” on top of JSON using emerging open-ish standards like Swagger (now OpenAPI) and JSON:API.

We’re good at re-inventing the things we already had on the web.

So, what makes a REST API a REST API, and not JSON-RPC?

I’m glad you didn’t ask.

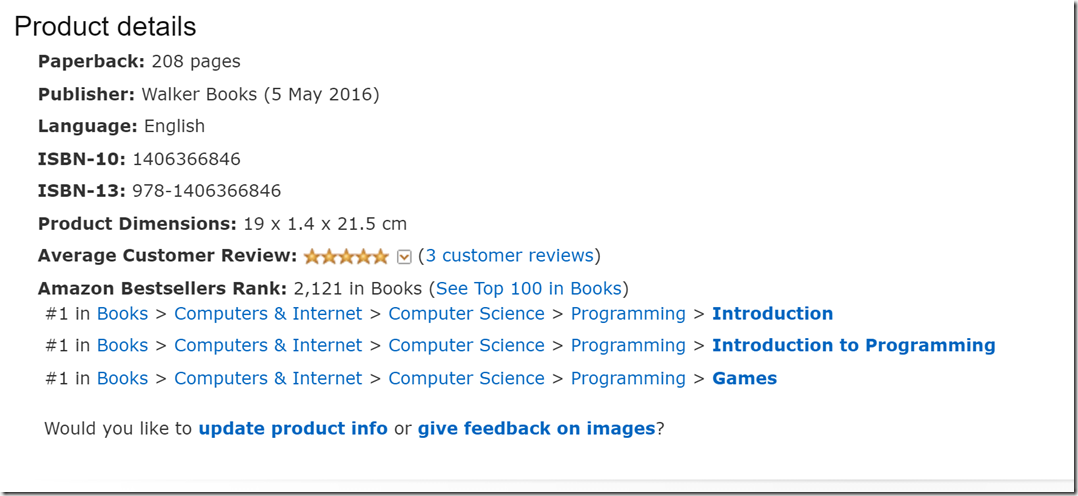

REST, at its core, is about modelling operations that can happen to resources over HTTP. There’s a great book by Jim Webber called REST in Practice if you want a deep dive into why REST is a good architectural style (and it is, a lot of the modern naysaying about REST is relatively uninformed and not too dissimilar to the treatment SOAP had before it).

People really care about what is and isn’t REST, and you’ll possibly upset people who really care about REST, by describing JSON-RPC as REST. JSON-RPC is “level 0” of the Richardson Maturity Model – a model that describes the qualities of a REST design. Don’t worry too much about it, because you can build RESTish, sane, decent JSON-RPC by doing a few things.

First, you need to use HTTP verbs correctly: GET for fetching (and never with side effects), POST for “doing operations”, PUT for “creating stuff where the state is controlled by the client”.

After that, make sure you organise your APIs into logical “resources” – your core domain concepts “customer”, “product”, “catalogue” etc.

Finally, use correct HTTP response codes for interactions with your API.

You might not be using “hypermedia as the engine of application state”, but you’ll probably do well enough that nobody will come for your blood.

You’ll also get a lot of the benefits of a fully RESTful API by doing just enough – resources will be navigable over HTTP, your documents will be cacheable, your API will work in most common tools. Use a Swagger or OpenAPI library to generate a schema and you’re pretty much doing what most people are doing.
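Those three rules fit in a surprisingly small amount of code. What follows is a sketch of a “product” resource that uses verbs and status codes sensibly; the `handle_product` function and the in-memory `PRODUCTS` store are made up for illustration, and a real API would sit behind a web framework.

```python
PRODUCTS = {"1": {"name": "Widget"}}  # hypothetical in-memory "product" resource


def handle_product(method, path, body=None):
    """Return (status_code, payload) for requests to /products/{id}."""
    parts = path.strip("/").split("/")
    if len(parts) != 2 or parts[0] != "products":
        return 404, {"error": "no such resource"}
    product_id = parts[1]

    if method == "GET":  # fetch only, never changes state
        if product_id not in PRODUCTS:
            return 404, {"error": "product not found"}
        return 200, PRODUCTS[product_id]

    if method == "PUT":  # the client decides the id and the full state
        created = product_id not in PRODUCTS
        PRODUCTS[product_id] = body
        return (201 if created else 200), body

    return 405, {"error": "method not allowed"}  # a verb this resource doesn't support
```

A `PUT` to `/products/2` answers 201 the first time (created) and 200 the second (replaced), and a `GET` for something that doesn’t exist is a 404 rather than a 200 with an error message buried in the body.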

But I read on Hacker News that REST sux and GraphQL is the way to go?

Yeah, we all read that post too.

GraphQL is, confusingly, a Query Language, a standard for HTTP APIs and a Schema tool all at once. With the proliferation of client-side-heavy web apps, GraphQL has gained popularity by effectively pushing the definition of what data should be returned to the client, into the client code itself.

It’s not the first time these kinds of “query from the front end” style approaches have been suggested, and likely won’t be the last. What sets GraphQL apart a little from previous approaches (notably Microsoft’s OData) is the idea that Types and Queries are implemented with Resolver code on the server side, rather than just mapping directly to some SQL storage.

This is useful for a couple of reasons – it means that GraphQL can be a single API over a bunch of disparate APIs in your domain, it solves the “over fetching” problem that’s quite common in REST APIs by allowing the client to specify a subset of the data they’re trying to retrieve, and it also acts as an anti-corruption layer of sorts, preventing unbounded access to underlying storage.
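To show the resolver idea without dragging in a GraphQL library, here’s a plain Python sketch. It is not GraphQL syntax and not how any particular GraphQL server is built; the data sources, field names and `query_customer` function are all invented. It just shows the client naming the fields it wants and the server running one resolver per field.

```python
# Hypothetical downstream data sources that a resolver might call.
def load_customer(customer_id):
    return {"id": customer_id, "name": "Ada", "email": "ada@example.com"}


def load_orders(customer_id):
    return [{"id": "o-1", "total": 25}, {"id": "o-2", "total": 40}]


# One resolver per field: only the requested ones get run.
RESOLVERS = {
    "name": lambda customer_id: load_customer(customer_id)["name"],
    "email": lambda customer_id: load_customer(customer_id)["email"],
    "orderCount": lambda customer_id: len(load_orders(customer_id)),
}


def query_customer(customer_id, fields):
    """Return only the fields the client asked for, and nothing else."""
    unknown = [field for field in fields if field not in RESOLVERS]
    if unknown:
        raise ValueError("unknown fields: " + ", ".join(unknown))
    return {field: RESOLVERS[field](customer_id) for field in fields}
```

Asking for `["name", "orderCount"]` never touches the email field, and the client can’t ask for anything that doesn’t have a resolver, which is the “no unbounded access to storage” part.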

GraphQL is also designed to be the single point of connection that your web or mobile app talks to, which is really useful for optimising performance – simply, it’s quicker for one API over the wire to call downstream APIs with lower latency, than your mobile app calling (at high latency) all the internal APIs itself.

GraphQL really is just a smart and effective way to schema your APIs, and provide a BFF – that’s backend for frontend, not a best friend forever – that’s quick to change.

BFF? What on earth is a BFF?

Imagine this problem – you’re working for MEGACORP where there are a hundred teams, or squads (you don’t remember, they rename the nomenclature every other week) – each responsible for a set of microservices.

You’re a web programmer trying to just get some work done, and a new feature has just launched.

You read the docs.

The docs describe how you have to orchestrate calls between several APIs, all requiring OAuth tokens, and claims, and eventually, you’ll have your shiny new feature.

So you write the API calls, and you realise that the time it takes to keep sending data to and from the client, let alone the security risks of having to check that all the data is safe for transit, slows you down to a halt.

This is why you need a best friend forever.

Sorry, a backend for front-end.

A BFF is an API that serves one, and specifically only one, application. It translates an internal domain (MEGACORP’S BUSINESS) into the internal language of the application it serves. It takes care of things like authentication, rate limiting, stuff you don’t want to do more than once. It reduces needless roundtrips to the server, and it translates data to be more suitable for its target application.

Think of it as an API, just for your app, that you control.
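In code, a BFF endpoint often ends up looking something like this sketch: one function that calls a few internal services and reshapes the results for a single screen. The two services here are hypothetical stand-ins that return canned data; in real life they’d be authenticated HTTP calls to other teams’ APIs.

```python
# Hypothetical internal services owned by other teams.
def profile_service(user_id, token):
    return {"first_name": "Sam", "surname": "Jones", "internal_tier_code": "G7"}


def orders_service(user_id, token):
    return [
        {"sku": "A1", "description": "Blue widget", "warehouse": "W3"},
        {"sku": "B2", "description": "Red widget", "warehouse": "W1"},
    ]


def account_page(user_id, token):
    """The one endpoint the app calls; returns exactly what its screen needs."""
    profile = profile_service(user_id, token)  # the token is handled here, once
    orders = orders_service(user_id, token)
    return {
        "greeting": "Hello, " + profile["first_name"],
        "recentOrders": [order["description"] for order in orders[:3]],
    }
```

The app makes one call over the slow network, and internal details like warehouse codes never leave the building.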

And tools like GraphQL and OData are excellent for BFFs. GraphQL gels especially well with modern JavaScript driven front ends, with excellent tools like Apollo and Apollo Server that help optimise these calls by batching requests.

It’s also pretty front-end-dev friendly – queries and schemas strongly resemble JSON, and it keeps your stack “JavaScript all the way down” without being beholden to some distant backend team.

Other things you might see and why

So now we understand our web servers, web apps, and our APIs, there’s surely more to modern web programming than that? Here are the things you’ll probably run into the most often.

Load Balancing

If you’re lucky enough to have traffic to your site, but unlucky enough to not be using a Platform-as-a-Service provider (more on that later), you’re going to run into a load balancer at some point. Don’t panic. Load balancers talk an archaic language, are often operated by grumpy sysops, or are just running copies of NGINX.

All a load balancer does is accept HTTP requests for your application (or from it), pick a server that isn’t very busy, and forward the request.
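“Pick a server that isn’t very busy” can be as simple as counting the requests in flight on each one. Here’s a sketch of that strategy (usually called “least connections”); the class and server names are made up, and real load balancers offer several other strategies too.

```python
class LeastBusyBalancer:
    """Sends each request to the server with the fewest requests in flight."""

    def __init__(self, servers):
        self.active = {server: 0 for server in servers}  # in-flight count per server

    def acquire(self):
        """Choose a server for a new request and mark it as a little busier."""
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server):
        """Call when the forwarded request has finished."""
        self.active[server] -= 1


balancer = LeastBusyBalancer(["app-1", "app-2"])
first = balancer.acquire()
second = balancer.acquire()
balancer.release(first)
third = balancer.acquire()
```

Here the first two requests land on different servers, and once the first one finishes, its server is the least busy again and gets the third.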

You can make load balancers do all sorts of insane things that you probably shouldn’t use load balancers for. People will still try.

You might see load balancers load balancing a particularly “hot path” in your software onto a dedicated pool of hardware to try to keep it safe or isolate it from failure. You might also see load balancers used to take care of SSL certificates for you – this is called SSL Termination.

Distributed caching

If one computer can store some data in memory, then lots of computers can store… well, a lot more data!

Distributed caching was pioneered by “Memcached” – originally written to scale the blogging platform LiveJournal in 2003. At the time, Memcached helped LiveJournal share cached copies of all the latest entries, across a relatively small number of servers, vastly reducing database server load on the same hardware.

Memory caches are used to store the result of something that is “heavy” to calculate, takes time, or just needs to be consistent across all the different computers running your server software. In exchange for a little bit of network latency, it makes the total amount of memory available to your application the sum of all the memory available across all your servers.

Distributed caching is also really useful for preventing “cache stampedes” – when a non-distributed cache fails, all of its cached data has to be recalculated by every client at once. By sharing their memory, the odds of a full cache failure are reduced significantly, and even when part of the cache does fail, only some of the data has to be recalculated.

Distributed caches are everywhere, and all the major hosting providers tend to support Memcached or Redis compatible (read: you can use the existing Memcached or Redis client libraries to access them) managed caches.

Understanding how a distributed cache works is remarkably simple – when an item is added, the key (the thing you use to retrieve that item) is hashed, and the result determines which computer in the cluster stores that data. Hashing the same key on any of the computers that are part of the distributed cache cluster will give the same answer.

This means that when the client libraries that interact with the cache are used, they understand which computer they must call to retrieve the data.

Breaking up large pools of shared memory like this is smart, because it makes looking things up exceptionally fast – no one computer needs to scan huge amounts of memory to retrieve an item.
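One common way to make every client agree on which computer holds an item is to hash the key and use the result to pick a node. This is a sketch of that approach with invented host names; production client libraries tend to use a fancier variant called consistent hashing, so that adding or removing a node moves fewer keys around.

```python
import hashlib

# Hypothetical cache hosts; every client is configured with the same list.
NODES = ["cache-1.internal", "cache-2.internal", "cache-3.internal"]


def node_for(key, nodes=NODES):
    """Pick the node responsible for a key; the same key always gives the same node."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(nodes)
    return nodes[index]
```

Because the hash is deterministic, any web server in your farm can work out where `"user:42"` lives without asking anyone, and different keys spread out across the nodes.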

Content Delivery Networks (CDNs)

CDNs are web servers run by other people, all over the world. You upload your data to them, and they will replicate your data across all of their “edges” (a silly term that just means “to all the servers all over the world that they run”) so that when someone requests your content, the DNS response will return a server that’s close to them, and the time it takes them to fetch that content will be much quicker.

The mechanics of operating a CDN are vastly more complicated than using one – but they’re a great choice if you have a lot of static assets (images!) or especially big files (videos! large binaries!). They’re also super useful to reduce the overall load on your servers.

Offloading to a CDN is one of the easiest ways you can get extra performance for a very minimal cost.

Let’s talk about design patterns! That’s real architecture

“Design patterns are just bug fixes for your programming languages”

People will talk about design patterns as if they’re some holy grail – but all a design pattern is, is the answer to a problem that people solve so often, there’s an accepted way to solve it. If our languages, tools or frameworks were better, they would probably do the job for us (and in fact, newer language features and tools often obsolete design patterns over time).

Let’s do a quick run through of some very common ones:

- MVC – “Split up your data model, UI code, and business logic, so they don’t get confused”

- ORM – “Object-Relational mapping” – “Use a mapping library and configured rules, to manage the storage of your in-memory objects, into relational storage. Don’t muddle the objects and where you save them together”.

- Active Record – “All your objects should be able to save themselves, because these are just web forms, who cares if they’re tied to the database!”

- Repository – “All your data access is in this class – interact with it to load things.”

- Decorator – “Add or wrap ‘decorators’ around an object, class or function to add common behaviour like caching, or logging without changing the original implementation.”

- Dependency Injection – “If your class or function depends on something, it’s the responsibility of the caller (often the framework you’re using) to provide that dependency”

- Factory – “Put all the code you need to create one of these, in one place, and one place only”

- Adapter – “Use an adapter to bridge the gap between things that wouldn’t otherwise work together – translating internal data representations to external ones. Like converting a twitter response into YourSocialMediaDataStructure”

- Command – “Each discrete action or request, is implemented in a single place”

- Strategy – “Define multiple ways of doing something that can be swapped in and out”

- Singleton – “There’s only one of these in my entire application”.

That’s a non-exhaustive list of some of the pattern jargon you’ll hear. There’s nothing special about design patterns, they’re just the 1990s version of an accepted and popular Stack Overflow answer.
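To pick one from the list, here’s roughly what the Decorator pattern looks like when it adds caching to a function. The `cached` decorator and the `shipping_cost` function are made up for the example; Python already ships `functools.lru_cache` for real use, which rather proves the point about languages absorbing patterns.

```python
import functools


def cached(func):
    """Decorator: remember results so repeated calls skip the slow work."""
    results = {}

    @functools.wraps(func)
    def wrapper(*args):
        if args not in results:
            results[args] = func(*args)  # only call the original on a cache miss
        return results[args]

    return wrapper


calls = []


@cached
def shipping_cost(weight_kg):
    calls.append(weight_kg)  # record each time the real calculation runs
    return 5 + 2 * weight_kg
```

Calling `shipping_cost(3)` twice only runs the calculation once, and the original function has no idea it’s being cached.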

Microservice architectures

Microservice architectures are just the “third wave” of Service Oriented Design.

Where did they come from?

In the late 90s, “COM+” (Component Services) and SOAP were popular because they reduced the risk of deploying things, by splitting them into small components – and providing a standard and relatively simple way to talk across process boundaries. This led to the popularisation of “3-tier” and later “n-tier” architecture for distributed systems.

N-Tier really was a shorthand for “split up the data-tier, the business-logic-tier and the presentation-tier”. This worked for some people – but suffered problems because horizontal slices through a system often require changing every “tier” to finish a full change. This ripple effect is bad for reliability.

Product vendors then got involved, and SOAP became complicated and unfashionable, which pushed people towards the “second wave” – Guerrilla SOA. Similar design, just without the high ceremony, more fully vertical slices, and less vendor middleware.

This led to the proliferation of smaller, more nimble services, around the same time as Netflix were promoting Hystrix – their library for building latency and fault tolerant systems.

The third wave of SOA – branded as Microservice architectures (by James Lewis and Martin Fowler) – is very popular, but perhaps not very well understood.

What Microservices are supposed to be: Small, independently useful, independently versionable, independently shippable services that execute a specific domain function or operation.

What Microservices often are: Brittle, co-dependent, myopic services that act as data access objects over HTTP that often fail in a domino like fashion.

Good microservice design follows a few simple rules:

- Be role/operation based, not data centric

- Always own your data store

- Communicate on external interfaces or messages

- What changes together, and is co-dependent, is actually the same thing

- All services are fault tolerant and survive the outages of their dependencies

Microservices that don’t exhibit those qualities are likely just secret distributed monoliths. That’s ok, loads of people operate distributed monoliths at scale, but you’ll feel the pain at some point.

Hexagonal Architectures

Now this sounds like some “Real Architecture TM”!

Hexagonal architecture, also known as the “ports and adapters” pattern – as defined by Alistair Cockburn – is one of the better pieces of “real application architecture” advice.

Put simply – have all your logic, business rules, domain specific stuff – exist in a form that isn’t tied to your frameworks, your dependencies, your data storage, your message busses, your repositories, or your UI.

All that “outside stuff”, is “adapted” to your internal model, and injected in when required.

What does that really look like? All your logic is in files, modules or classes that are free from framework code, glue, or external data access.

Why? Well it means you can test everything in isolation, without your web framework or some broken API getting in the way. Keeping your logic clear of all these external concerns is a safe way to design applications.
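A tiny sketch of what that separation can look like: the business rule depends on a “port” (an interface describing what it needs), and the outside world is plugged in as an “adapter”. All the names here (`OrderStore`, `place_order`, `InMemoryOrderStore`) are hypothetical.

```python
from typing import Protocol


class OrderStore(Protocol):
    """Port: what the core logic needs from storage, and nothing more."""

    def save(self, order_id, total):
        ...


def place_order(store, order_id, prices):
    """Core business rule: no framework, database or HTTP code in sight."""
    if not prices:
        raise ValueError("an order needs at least one item")
    total = sum(prices)
    store.save(order_id, total)
    return total


class InMemoryOrderStore:
    """Adapter used in tests; a real one might wrap a SQL database."""

    def __init__(self):
        self.saved = {}

    def save(self, order_id, total):
        self.saved[order_id] = total
```

In a test you hand `place_order` the in-memory adapter; in production you’d hand it one that talks to your real database, and the business rule never knows the difference.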

There’s a second, quite popular approach described as “Twelve Factor Apps” – which mostly shares these same design goals, with a few more prescriptive rules thrown on top.

Scaling

Scaling is hard if you try to do it yourself, so absolutely don’t try to do it yourself.

Use vendor provided cloud abstractions like Google App Engine, Azure Web Apps or AWS Lambda with autoscaling support enabled if you can possibly manage it.

Consider putting your APIs on a serverless stack. The further up the abstraction you get, the easier scaling is going to be.

Conventional wisdom says that “scaling out is the only cost-effective thing”, but plenty of successful companies managed to scale up with a handful of large machines or VMs. Scaling out gives you other benefits (often geo-distribution related, or cross availability zone resilience) but don’t feel bad if the only lever you have is the one labelled “more power”.

Architectural patterns for distributed systems

Building distributed systems is harder than building just one app. Nobody really talks about that much, but it is. It’s much easier for something to fail when you split everything up into little pieces, but you’re less likely to go completely down if you get it right.

There are a couple of things that are always useful.

Circuit Breakers everywhere

Circuit breaking is a useful distributed system pattern where you model out-going connections as if they’re an electrical circuit. By measuring the success of calls over any given circuit, if calls start failing, you “blow the fuse”, queuing outbound requests rather than sending requests you know will fail.

After a little while, you let a single request flow through the circuit (the “half open” state), and if it succeeds, you “close” the circuit again and let all the queued requests flow through.

Circuit breakers are a phenomenal way to make sure you don’t fail when you know you might, and they also protect the service that is struggling from being pummelled into oblivion by your calls.

You’ll be even more thankful for your circuit breakers when you realise you own the API you’re calling.
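Here’s a minimal sketch of a circuit breaker. One deliberate simplification: while the circuit is open it rejects calls straight away rather than queuing them, which is the easier variant to show in a few lines. The class, its parameters and the injectable `clock` are all assumptions made for the example.

```python
import time


class CircuitOpenError(Exception):
    """Raised instead of making a call that we expect to fail."""


class CircuitBreaker:
    def __init__(self, max_failures=3, reset_after=30.0, clock=time.monotonic):
        self.max_failures = max_failures
        self.reset_after = reset_after  # seconds to wait before a trial call
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed

    def call(self, func, *args):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                raise CircuitOpenError("circuit is open, not calling")
            # Waited long enough: "half open", so let this one call through.
        try:
            result = func(*args)
        except Exception:
            self.failures += 1
            if self.opened_at is not None or self.failures >= self.max_failures:
                self.opened_at = self.clock()  # blow (or re-blow) the fuse
            raise
        self.failures = 0
        self.opened_at = None  # a success closes the circuit again
        return result
```

You’d wrap each outbound dependency in its own breaker, something like `breaker.call(fetch_prices, product_id)`, where `fetch_prices` is whatever function makes your remote call.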

Idempotency and Retries

The complementary design pattern for all your circuit breakers – you need to make sure that you wrap all outbound connections in a retry policy, and a back-off.

What does this mean? You should design your calls to be non-destructive if you double submit them (idempotency). And if you have calls that are configured to retry on errors, you should back off a little (if not exponentially) when repeated failures occur – at the very least to give the thing you’re calling time to recover.
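A retry policy with exponential back-off is only a few lines. This is a sketch, assuming the function you pass in is safe to call more than once; the `retry` helper and its default numbers are invented for illustration.

```python
import time


def retry(func, attempts=4, base_delay=0.5, sleep=time.sleep):
    """Call func, retrying on failure with exponentially growing pauses."""
    for attempt in range(attempts):
        try:
            return func()
        except Exception:
            if attempt == attempts - 1:
                raise  # out of retries, let the caller see the failure
            sleep(base_delay * 2 ** attempt)  # wait 0.5s, then 1s, then 2s...
```

The `sleep` parameter is only there so you can swap in a fake during tests instead of actually waiting. In real code you’d also want to retry only on errors that might be temporary, not on every exception.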

Bulkheads

Bulkheads are inspired by physical bulkheads in submarines. When part of a submarine’s hull is compromised, the bulkheads shut, preventing the rest of the sub from flooding. It’s a pretty cool analogy, and it’s all about isolation.

Reserved resources, capacity, or physical hardware can be protected for pieces of your software, so that an outage in one part of your system doesn’t ripple down to another.

You can set maximum concurrency limits for certain calls in multithreaded systems, make judicious use of timeouts (better to timeout, than lock up and fall over), and even reserve hardware or capacity for important business functions (like checkout, or payment).
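The concurrency limit version of a bulkhead can be sketched with a semaphore: each protected piece of your system gets a fixed number of slots, and callers beyond that are turned away instead of piling up. The `Bulkhead` class is a made-up name for the example.

```python
import threading


class Bulkhead:
    """Caps how many calls may run at once; extra callers are turned away."""

    def __init__(self, max_concurrent):
        self._slots = threading.BoundedSemaphore(max_concurrent)

    def run(self, func, *args):
        if not self._slots.acquire(blocking=False):  # no free slot: fail fast
            raise RuntimeError("bulkhead full, rejecting call")
        try:
            return func(*args)
        finally:
            self._slots.release()  # always hand the slot back
```

Give your checkout calls one bulkhead and your recommendation calls another, and a flood of slow recommendation requests can’t use up the capacity checkout needs.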

Event driven architectures with replay / message logs

Event / message-based architectures are frequently resilient, because by design the inbound calls made to them are not synchronous. By using events that are buffered in queues, your system can cope with outages, scaling up and scaling down, and rolling upgrades without any special consideration. Its normal mode of operation is “read from a queue”, and this doesn’t change in exceptional circumstances.

When combined with the competing consumers pattern – where multiple processors race to consume messages from a queue – it’s easy to scale out for good performance with queue and event driven architectures.
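Here’s competing consumers in miniature, using threads and an in-process queue as stand-ins for separate machines and a real message broker. The `process_all` function and the upper-casing “work” are invented for the example.

```python
import queue
import threading


def process_all(messages, worker_count=3):
    """Several workers race to pull messages from one shared queue."""
    inbox = queue.Queue()
    for message in messages:
        inbox.put(message)

    results = []
    lock = threading.Lock()

    def worker(name):
        while True:
            try:
                message = inbox.get_nowait()
            except queue.Empty:
                return  # queue drained, this consumer stops
            with lock:
                results.append((name, message.upper()))  # stand-in for real work

    threads = [
        threading.Thread(target=worker, args=("worker-%d" % i,))
        for i in range(worker_count)
    ]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return results
```

Each message is handled exactly once, by whichever worker grabs it first, so going faster is mostly a matter of raising `worker_count`. Which worker gets which message isn’t predictable, and that’s fine.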

Do I need Kubernetes for that?

No. You probably don’t have the same kind of scaling problems as Google do.

With the popularisation of docker and containers, there’s a lot of hype gone into things that provide “almost platform like” abstractions over Infrastructure-as-a-Service. These are all very expensive and hard work.

If you can in any way manage it, use the closest thing to a pure-managed platform that you possibly can. Azure App Services, Google App Engine and AWS Lambda will be several orders of magnitude more productive for you as a programmer. They’ll be easier to operate in production, and more explicable and supported.

Kubernetes (often irritatingly abbreviated to k8s, along with its wonderful ecosystem of esoterically named additions like helm, and flux) requires a full time ops team to operate, and even in “managed vendor mode” on EKS/AKS/GKE the learning curve is far steeper than the alternatives.

Heroku? App Services? App Engine? Those are things you’ll be able to set up, yourself, for production, in minutes to only a few hours.

You’ll see pressure to push towards “Cloud neutral” solutions using Kubernetes in various places – but it’s snake oil. Being cloud neutral simply means you pay the cost of a cloud migration (maintaining abstractions, isolating yourself from useful vendor specific features) perpetually, rather than in the (exceptionally unlikely) scenario that you’re switching cloud vendor.

The responsible use of technology includes using the thing most suited to the problem and scale you have.

Sensible Recommendations

Always do the simplest thing that can possibly work. Architecture has a cost, just like every abstraction. You need to be sure you’re getting a benefit before you dive into some complex architectural patterns.

Most often, the best architectures are the simplest and most amenable to change.