Software development transforms human requirements, repeatedly, until software is eventually produced.

We transform things we’d like into feature requests.

Which are subsequently decomposed into designs.

Which are eventually transformed into working software.

At each step in this process the information becomes denser, more concrete, more specific.

In agile software development, a user story is a brief statement of what a user wants a piece of software to do. User stories are meant to represent a small, atomic, valuable change to a software system.

Sounds simple, right?

But they’re more than that – user stories are artifacts in agile planning games, triggers that start conversations, tools used to track progress, and often the place where a lot of “product thinking” ends up distilled. User stories end up as the single source of truth for pending changes to software.

Because they’re so central to getting work done, it’s worth understanding them properly – so we’re going to walk through what exactly user stories are, where they came from, and why we use them.

The time before user stories

Before agile reached critical mass, the source of change for software systems was often a large specification, the result of a lengthy requirements engineering process.

In traditional waterfall processes, the requirements gathering portion of software development generally happened at the start of the process and resulted in a set of designs for software that would be written at a later point.

Over time, weaknesses in this very linear “think -> plan -> do” approach to change became obvious. The specifications that were created turned into systems that took a long time to build, were often never finished, and were full of defects that were only discovered way, way too late.

The truth was that the systems as they were specified were often not what people actually wanted. Because the design and development of complicated pieces of software were disconnected, design decisions were frequently misinterpreted as requirements, and user feedback was hardly ever solicited until the very end of the process.

This is about as perfect a storm as can exist for requirements – long, laborious requirement capturing processes resulting in the wrong thing being built.

To make matters worse, because so much thought-work was put into crafting the specifications at the beginning of the process, they often brought out the worst in people. Specs became unchangeable, locked-down, binding things; so much work had gone into them that if that work was ever invalidated, the authors would often fall foul of the sunk cost fallacy and carry on down the same path anyway because it was “part of the design”.

The specifications never met their goals. They isolated software development from its users with layers of people and management. They bound developers to decisions made during times of speculation. And they charmed people with the security of “having done some work” when no software was being produced.

They provided a feedback-less illusion of progress.

“But not my specifications!” I hear you cry.

No, not all specifications, but most of them.

There had to be a better way to capture requirements that:

- Was open to change to match the changing nature of software

- Could operate at the pace of the internet

- Didn’t divorce the authors of work from the users of the systems they were designing

- Was based in real, measurable progress.

The humble user story emerged as the format to tackle this problem.

What is a user story?

A user story is a short, structured statement of a change to a system. It should be outcome-focused, precise, and non-exhaustive.

Stories originated as part of physical work-tracking systems in early agile methods – they were handwritten on the front of index cards, with acceptance criteria written on the reverse of the card. The physical format added constraints to user stories that are still useful today.

Their job is to describe an outcome, not an implementation. They’re used as artefacts in planning activities, and they’re specifically designed to be non-exhaustive – containing only the information absolutely required for a change to a product.

It’s the responsibility of the whole team to make sure our stories are high enough quality to work from, and to verify the outcomes of our work.

Furthermore, user stories are an exercise in restraint. They do not exist to replace documentation. They do not exist to replace conversation and collaboration. Their job is to decompose large, tough, intractable problems into small, articulated, well-considered changes.

From the mid-2000s onwards, user stories have mostly replaced traditional requirements engineering as the way these small, valuable changes are captured.

The user story contents

The most common user story format, and generally the one that should be followed by default, was popularised by the XP team at Connextra in 2001. It looks like this:

As a <persona>

I want <business focused outcome>

So that <reason driving the change>

Accept:

- List of…

- Acceptance criteria…

Notes:

Any notes

This particular format is popular because it captures both the desired outcome from the user’s perspective (the persona) and the product thinking or justification for the change in the “So that” clause.

By adhering to the constraint of being concise, the story format forces us to decompose our work into small, deliverable chunks. It doesn’t prevent us from writing “build the whole solution”, but it illuminates poorly written stories very quickly.

Finally, the user story contains a concise, non-exhaustive list of acceptance criteria. Acceptance criteria list the essential qualities of the implemented work. Until all of them are met, the work isn’t finished.

Acceptance criteria aren’t an excuse to write a specification by stealth. They are not the place to define the format of API response documents or snippets of HTML for web interfaces. They’re conversation points used to verify, and later accept, the user story as completed.

Good acceptance criteria are precise and unambiguous – anything else isn’t an acceptance criterion. For example, “must work in IE6” is better than “must work in legacy browsers”, and “must be accessible” is worse than “must adhere to all WCAG 2.0 recommendations”.
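To make the shape of the format concrete, here is a minimal sketch of how a team might model the card in a tracking tool. It assumes a hypothetical `UserStory` record with one field per clause of the Connextra template, and encodes the rule that the work isn’t finished until every acceptance criterion is met. The class and method names are illustrative, not part of any real tool.

```python
from dataclasses import dataclass, field


@dataclass
class AcceptanceCriterion:
    text: str
    met: bool = False


@dataclass
class UserStory:
    # Hypothetical model: one field per clause of the "As a / I want / So that" template.
    persona: str
    want: str
    so_that: str
    acceptance: list[AcceptanceCriterion] = field(default_factory=list)
    notes: str = ""

    def is_done(self) -> bool:
        # The work isn't finished until every acceptance criterion is met.
        # Assumption: a story with no criteria can't be verified, so it is never done.
        return bool(self.acceptance) and all(c.met for c in self.acceptance)

    def render(self) -> str:
        # Lay the story out the way it would appear on the card.
        lines = [
            f"As a {self.persona}",
            f"I want {self.want}",
            f"So that {self.so_that}",
            "Accept:",
        ]
        lines += [f"- {c.text}" for c in self.acceptance]
        if self.notes:
            lines += ["Notes:", self.notes]
        return "\n".join(lines)


if __name__ == "__main__":
    story = UserStory(
        persona="Back-Office Manager",
        want="an event published when a customer account is changed",
        so_that="downstream systems can subscribe to make decisions",
        acceptance=[AcceptanceCriterion("Event contains kind of change")],
    )
    print(story.render())
    print(story.is_done())
```

Keeping the model this small is deliberate: there is nowhere to put a specification, which mirrors the constraint the index card used to impose.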

Who and what is a valid persona?

Personas represent the users of the software that you are building.

This is often mistaken to mean “the customers of the business” and this fundamental misunderstanding leads to lots of unnatural user stories being rendered into reality.

Your software has multiple different types of users – even users you don’t expect. If you’re writing a web application, you might have personas that represent “your end user”, “business to business customers”, or other customer archetypes. In addition to these, however, you’ll often have personas like “the on-call engineer supporting this application”, “first line support” or “the back-office user who configures this application”.

While they might not be your paying customers, they’re all valid user personas and users of your software.

API teams often fall into the trap of writing user stories from the perspective of the end customers of the software that consumes their API. This is a mistake. If you’re building APIs, write user stories from the perspective of your actual users – the developers and client applications that consume your APIs to build consumer-facing functionality.

What makes a good user story?

While the vast majority of teams use digital tracking systems today, we should still respect the constraints that physical cards placed on user stories and not over-write our stories. It’s important to remember that user stories are meant to contain distilled information for people to work from.

As the author of a user story, you need to be the world’s most aggressive editor – removing words that introduce ambiguity, removing any and all repetition and making sure the content is precise. Every single word you write in your user story should be vital and convey new and distinct information to the reader.

It’s easy to misinterpret this as “user stories must be exhaustive”, but that isn’t the case. Keep it tight, don’t waffle, but don’t try to reproduce every piece of auxiliary documentation about the feature or its context inside every story.

For example:

As a Back-Office Manager

I want business events to be created that describe changes to, or events happening to, customer accounts that are of relevance to back-office management

So that those events may be used to prompt automated decisions on changing the treatment of accounts based on back-office strategies that I have configured.

Could be re-written:

As a Back-Office Manager

I want an event published when a customer account is changed

So that downstream systems can subscribe to make decisions

Accept:

- Event contains kind of change

- Event contains account identifiers

- External systems can subscribe

In this example, edited, precise language makes the story easier to read, and moving some of the nuance into clearly articulated acceptance criteria prevents the reader from having to guess what is expected.
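Because each of these criteria is precise, each maps onto a single check that would live in a test tool rather than in the story itself. The sketch below assumes a hypothetical in-memory `AccountEventBus` and `AccountChanged` event purely for illustration. The story deliberately leaves the real messaging technology and payload format to the team, so this is not a prescribed design.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class AccountChanged:
    kind: str                     # the kind of change, e.g. "address_updated"
    account_ids: tuple[str, ...]  # the account identifiers the change applies to


class AccountEventBus:
    """Hypothetical in-memory publisher, used only to illustrate the criteria."""

    def __init__(self) -> None:
        self._subscribers: list[Callable[[AccountChanged], None]] = []

    def subscribe(self, handler: Callable[[AccountChanged], None]) -> None:
        self._subscribers.append(handler)

    def account_changed(self, account_ids: tuple[str, ...], kind: str) -> None:
        # Publish one event to every subscriber when an account changes.
        event = AccountChanged(kind=kind, account_ids=account_ids)
        for handler in self._subscribers:
            handler(event)


def check_acceptance_criteria() -> None:
    bus = AccountEventBus()
    received: list[AccountChanged] = []
    bus.subscribe(received.append)  # "External systems can subscribe"

    bus.account_changed(account_ids=("ACC-123",), kind="address_updated")

    assert len(received) == 1
    assert received[0].kind == "address_updated"     # "Event contains kind of change"
    assert received[0].account_ids == ("ACC-123",)   # "Event contains account identifiers"


if __name__ == "__main__":
    check_acceptance_criteria()
    print("all acceptance criteria met")
```

Note how the story never said which fields or transport to use. The checks only pin down what the criteria actually state, leaving the rest open to conversation.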

Bill Wake put together the mnemonic device INVEST, standing for Independent, Negotiable, Valuable, Estimable, Small and Testable, to describe the characteristics of a good user story – but in most cases these qualities can be met by remembering the constraints of physical cards.

If in doubt, remember the words of Ernest Hemingway:

“If I started to write elaborately, or like someone introducing or presenting something, I found that I could cut that scrollwork or ornament out and throw it away and start with the first true simple declarative sentence I had written.”

Write less.

The joy of physical limitations

Despite the inevitability of a digital, remote-first world, it’s easy to be wistful for the days of user stories in their physical form, with their associated physical constraints and limitations.

Stories written on physical index cards are constrained by the size of the cards – this provides the wonderful side effect of keeping stories succinct – they cannot possibly bloat or become secret specifications because the cards literally are not big enough.

The scrappy nature of index cards and handwritten stories also comes with the additional psychological benefit of making them feel like impermanent, transitory artefacts that can be torn up and rewritten at will, re-negotiated, and refined, without ceremony or loss. By contrast, teams can often become attached to tickets in digital systems, valuing the audit log of stories moved back and forth and back and forth from column to column as if it’s more important than the work it’s meant to inspire and represent.

Subtasks attached to index-card stories on post-it notes become heavy and start falling apart, items get lost, and the cards sag, prompting teams to divide bloated stories into smaller, more granular increments. Again, the physicality of the artefact brings its own benefit.

Physical walls of stories are ever-present, tactile, and real. Surrounding your teams with their progress helps build a kind of total immersion that digital tools struggle to replicate. Columns on a wall can be physically constrained and reconfigured in the space, and visual workspaces can be built around the way work and tasks actually flow, rather than around how a developer at a work-tracking vendor presumes you work.

There’s a joy in physical, real artefacts of production that we have struggled to replicate digitally. But the world has changed, and digital workflows can be enough. It takes work, though, not to become so enamoured with the instrumentation, the progress reports, and the roll-up statistics that we lose sight of the fact that user stories and work-tracking systems were meant to help us complete some work – that they are the map and not the destination.

The best digital workflows succeed by following the same disciplines and constraints as physical boards. Teams find the most success with digital tools when members feel empowered to delete and re-form stories and tickets at any point, to move, refine, and relabel the work as they learn, and to do what’s right for their project and worry about how to report on it afterwards.

Those physical constraints helped give teams focus, and they are worth replicating.

What needs to be expressed as a user story?

Lots of teams get lost in the weeds when they try to understand “what’s a user story” vs “what’s a technical task” vs “what’s a technical debt card”. Looking back at the physical origins of these artefacts, the answer is obvious – they are all the same thing.

Expressing changes as user stories with personas and articulated outcomes is valuable whatever the kind of change. It’s a way to communicate with your team that everyone understands, and it’s a good way to keep your work honest.

However, don’t fall into the trap of user story theatre for small pieces of work that need to happen anyway.

I’d not expect a programmer to see a missing unit test and write a user story to fix it - I’d expect them to fix it. I’d not expect a developer to write a “user story” to fix a build they just watched break. This is essential, non-negotiable work.

As a rule of thumb, technical things that take less time to solve than to write up should just be fixed, rather than fudging language to artificially legitimise the work – it’s already legitimate work.

Every functional change should be expressed as a user story – just make sure you know who the change is for. If you can’t articulate who you’re doing some work for, it is at best a symptom of not understanding the audience for your changes, and at worst a sign that you’re trying to do work that needn’t be done at all.

The relationship between user stories, commits, and pull requests

Pull request driven workflows can suffer from the unfortunate side-effect of encouraging deferred integration and driving folks towards “one user story, one pull request” working patterns. While this may work fine for some categories of change, it can be problematic for larger user stories.

It’s worth remembering when you establish your own working patterns that there is absolutely nothing wrong with multiple sets of changes contributing to the completion of a single user story. Committing the smallest piece of work that doesn’t break your system is safer by default.

The sooner you’re integrating your code, the better, regardless of story writing technique.

What makes a bad user story?

There are plenty of ways to write poor quality user stories, but here are a few favourites:

Decomposed specifications / Design-by-stealth – prescriptive user stories that exhaustively list outputs or specifications as their acceptance criteria are low quality. They constrain your teams to one fixed solution and in most cases don’t result in high quality work from teams.

Word Salad – user stories that grow longer than a paragraph or two almost always contain repetition or leave their intent open to interpretation. They create work, rather than remove it.

Repetition or boiler-plate copy/paste – obvious repetition and copy/pasted content in user stories invents work and burdens readers with interpretation. It’s the exact opposite of the intention of a user story, which is to enhance clarity. The moment you reach for CTRL+C/V while writing a story, you’re making a mistake.

Given / When / Then or test script syntax in stories – user stories do not have to be all things to all people. Test scripts, specifications and context documents have no place in stories – they don’t add clarity, they increase the time it takes to comprehend requirements. While valuable, test scripts belong in your test tools, and specifications and context documents belong in your wiki.

Help! All my stories are too big! Sequencing and splitting stories.

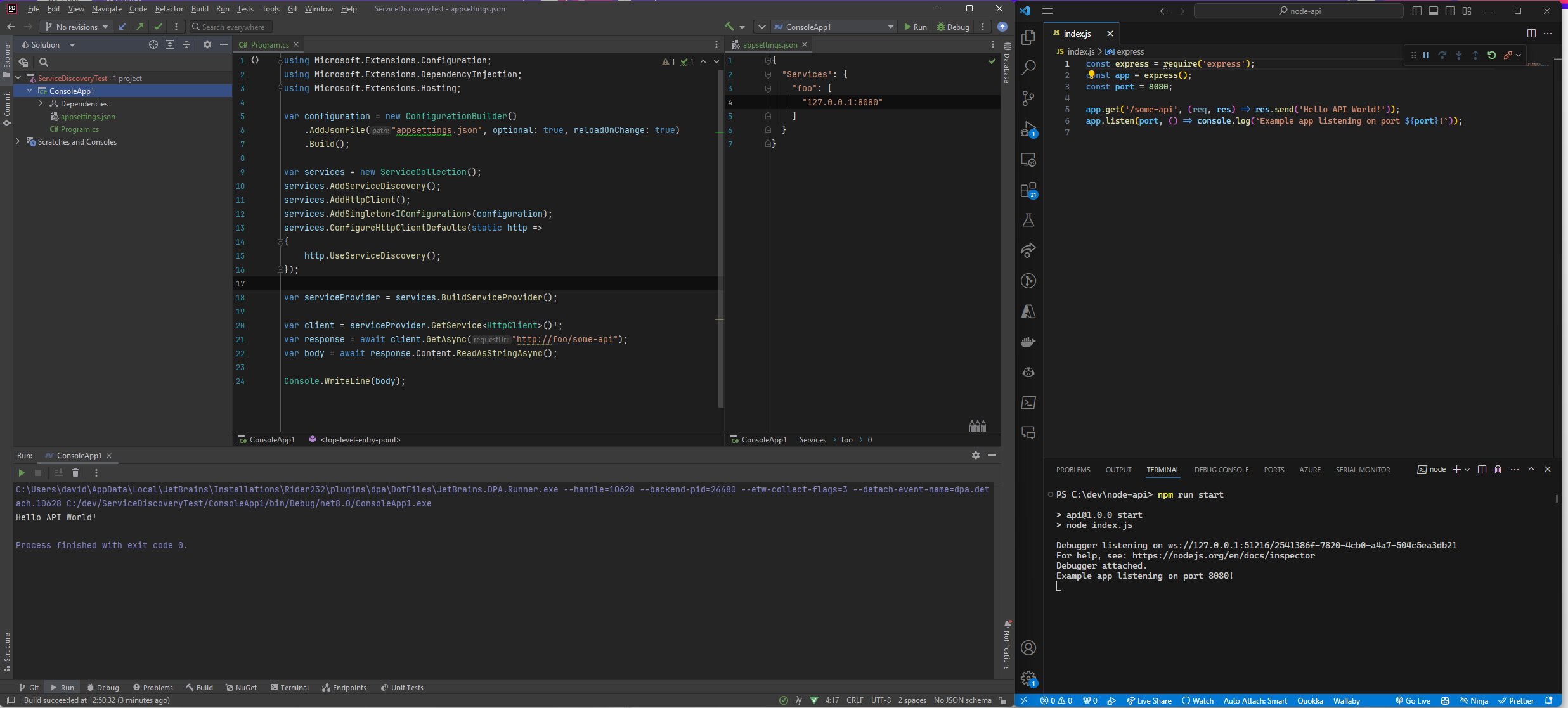

Driving changes through user stories becomes trickier when the stories require design exercises, or when the solution in mind has some prerequisites (standing up new infrastructure for the first time, for example). It’s useful to split and sequence stories so that larger pieces of technical work become easier while still being deliverable in small chunks.

Imagine, for example, a user story that looked like this:

As a customer

I want to call a customer API

To retrieve the data stored about me, my order history, and my account expiry date

On the surface the story might sound reasonable, but if this were a story for a brand new API, your development team would soon start spiralling, asking questions like “how does the customer authenticate?”, “what data should we return by default?”, “how do we handle pagination of the order history?” and lots of other valid questions that soon represent quite a lot of hidden complexity in the work.

In the above example, you’d probably split that work into several smaller stories – starting with the smallest possible story that forms a tracer bullet through the process, which you can then build on top of.

Perhaps it’d be this list of stories:

- A story to retrieve the user’s public data over an API. (Create the API)

- A story to add their account expiry to that response if they authenticate. (Introduce auth)

- A story to add the top-level order summary (totals, number of previous orders)

- A story to add pagination and past orders to the response

This is just illustrative, and the exact way you slice your stories depends heavily on context – but the themes are clear: split your larger stories into smaller, useful, shippable parts that prove and add functionality piece by piece. Slicing like this removes risk from your delivery, allows you to introduce technical work alongside the first story that needs it, and keeps progress visible.

Occasionally you’ll stumble up against a story that feels intractable and inestimable. First, don’t panic – it happens to everyone. Breathe. Then write down the questions you have on a card. These questions form the basis of a spike – a small, time-boxed story focused on answering questions that doesn’t deliver user-facing value. Spikes exist to help you remove ambiguity, do some quick prototyping, and learn whatever you need to learn so that you can come back and work on the story that got blocked.

Spikes should always pose a question and have a defined outcome – be it example code, or documentation explaining what was learnt. They’re the trick to help you when you don’t seem to be able to split and sequence your work because there are too many unknowns.

Getting it right

You won’t get your user stories right first time – but, much in the spirit of other agile processes, you’ll get better at writing and refining user stories by doing it. Hopefully this primer will help you avoid trying to boil the ocean and lead you to build small things, safely.

If you’re still feeling nervous about writing high quality user stories with your teams, Henrik Kniberg and Alistair Cockburn published a workshop they called “The Elephant Carpaccio Exercise” in 2013, which will help you practise in a safe environment. Their worksheet, the Elephant Carpaccio facilitation guide, is available to download.